CDN for Public Data

Components

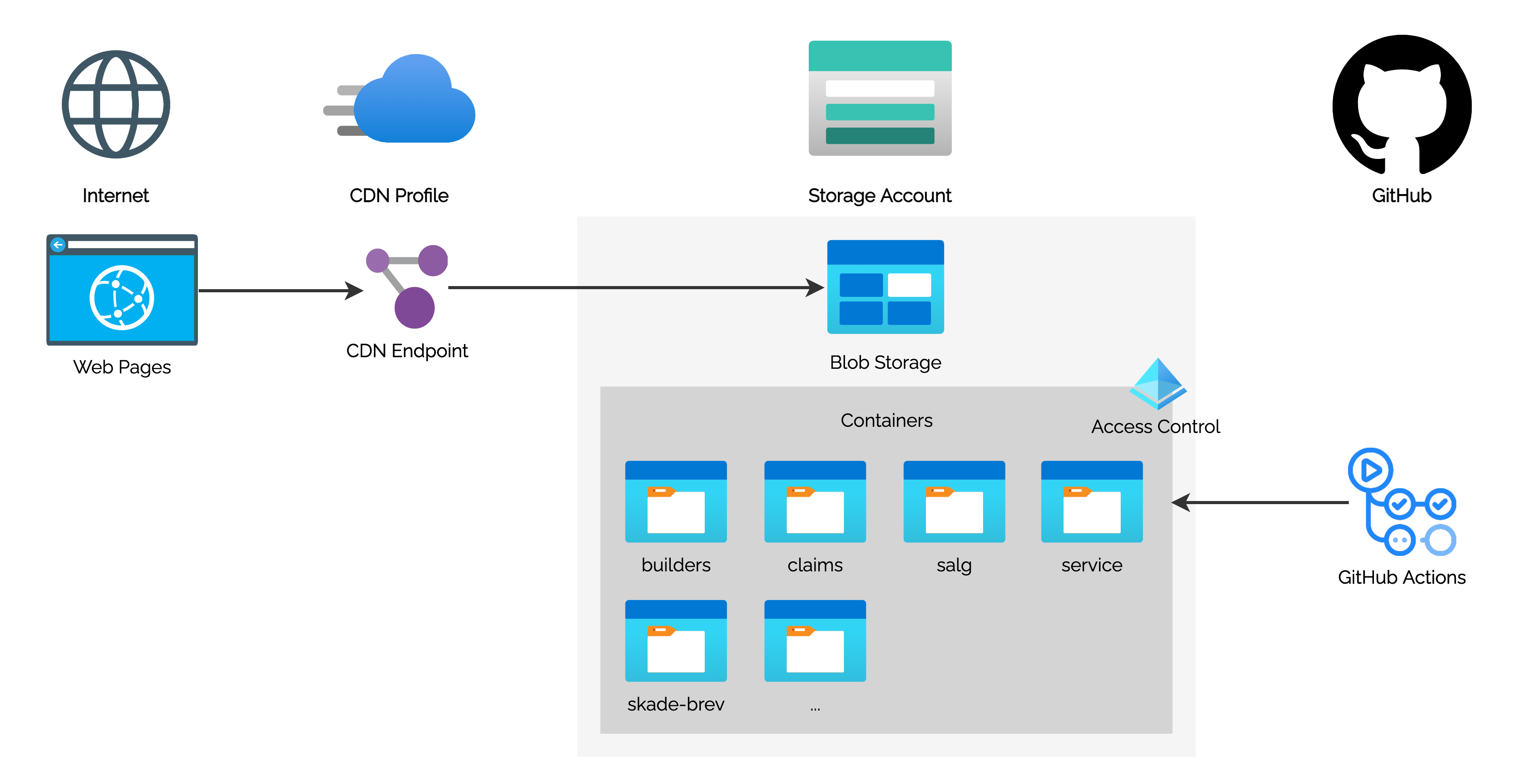

The CDN endpoint uses a storage account as its origin server. When the endpoint receives a request for a resource that is not in its cache, it tries to fetch the resource from the storage account.

This solution uses a single storage account with a separate Blob Storage container for each team.

Files are uploaded to the containers from GitHub Actions workflows.

Authentication

To upload files to Blob Storage from your GitHub Actions workflows, you need to authenticate using OpenID Connect. Follow the self-service steps in OpenID Connect (OIDC) for Azure Authentication to provision a Service Principal (and the GitHub Actions secrets) for your repository.

Create a Storage Container

Storage containers and their role assignments are created and managed with Terraform in the terraform-publicdata repository.

Example:

    data "azuread_group" "demo_repos_azure_oidc" {
      name = "RBAC_AAD_DEMO_GITHUB_REPOS_TEST"
    }

    module "common" {
      source = "../../modules/common"
      ...

      container_configs = {
        ...
        "demo" = {
          contributors = [
            data.azuread_group.demo_repos_azure_oidc.object_id,
          ]
        },
      }
    }

The repository contains a separate configuration for each environment. Locate the main.tf for the environment where you want to add a container, and add a new key-value pair to the container_configs map of module.common.

- The key should be the name of the container

- The value should be an object specifying contributors. Use a data source to get the object ID of your service principal or an Azure AD group that it belongs to.

Upload files

Use the az storage blob commands to upload files as part of an automated workflow.

Example with az storage blob upload-batch:

    name: "Build and deploy"

    on:
      push:
        branches:
          - main

    permissions:
      id-token: write   # required to request the OIDC token
      contents: read    # required by actions/checkout once permissions are set explicitly

    jobs:
      build:
        runs-on: ubuntu-latest
        steps:
          - name: Git checkout
            uses: actions/checkout@v2

          ...

          - name: Login to Azure
            uses: azure/login@v1
            with:
              client-id: ${{ secrets.AZURE_TEST_OIDC_CLIENT_ID }}
              tenant-id: ${{ secrets.AZURE_TEST_TENANT_ID }}
              subscription-id: ${{ secrets.GTM_SUBSCRIPTION_ID }}

          - name: Upload files to storage container
            uses: azure/cli@v1
            with:
              inlineScript: >-
                az storage blob upload-batch
                --account-name mystorageaccount
                --auth-mode login
                --source $GITHUB_WORKSPACE/dist
                --destination demo
                --destination-path my-app

- Login (Required): Client ID, Tenant ID and Subscription ID for the Service Principal associated with an OIDC Federated Identity Credential.

- --account-name (Required): Storage account name. You can look up the correct storage account name in CDN for Public Data - Gjensidige Confluence.

- --source (Required): The directory where the files to be uploaded are located.

- --destination (Required): The blob container where the files will be uploaded.

- --destination-path (Optional): The destination path that will be prepended to the blob name.
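
To make the effect of --destination-path concrete, the sketch below computes the blob name a local file would end up with. It is a plain shell illustration, not part of the Azure CLI; the function name blob_name_for and the example paths are hypothetical.

```shell
# Hypothetical helper: print the blob name that a file under SOURCE_DIR
# would get when uploaded with the given --destination-path (may be empty).
blob_name_for() {
  source_dir="${1%/}"                 # strip a trailing slash, if any
  file="$2"
  dest_path="${3%/}"
  relative="${file#"$source_dir"/}"   # path of the file relative to SOURCE_DIR
  if [ -n "$dest_path" ]; then
    printf '%s/%s\n' "$dest_path" "$relative"
  else
    printf '%s\n' "$relative"
  fi
}
```

For example, `blob_name_for dist dist/js/app.js my-app` prints `my-app/js/app.js`, which is the path of that file inside the destination container.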

Reference: az storage blob
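
When only a single file needs to be published, az storage blob upload can be used instead of upload-batch. The following is a minimal sketch under the same assumptions as the workflow above (an earlier Azure login step and --auth-mode login); the wrapper name upload_one and its argument order are hypothetical.

```shell
# Hypothetical wrapper: upload one file to a container, authenticating with
# the identity from the preceding Azure login step (--auth-mode login).
upload_one() {
  account="$1"; container="$2"; file="$3"; blob_name="$4"
  az storage blob upload \
    --account-name "$account" \
    --auth-mode login \
    --container-name "$container" \
    --file "$file" \
    --name "$blob_name"
}
```

A call such as `upload_one mystorageaccount demo dist/index.html my-app/index.html` would place the file at my-app/index.html in the demo container. Depending on the Azure CLI version, replacing an existing blob may additionally require the --overwrite flag.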

Caching Behavior

A file remains cached on an edge server until the time-to-live (TTL) specified by its HTTP headers expires. If no TTL is set on a blob, Azure CDN will automatically apply a default TTL of two days, unless there are custom caching rules set up for the resource.
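
One way to set an explicit TTL is to store a Cache-Control header on the blobs at upload time, using the --content-cache-control option of az storage blob upload-batch. The sketch below assumes the CDN endpoint honors the origin's Cache-Control header and that no custom caching rule overrides it; the wrapper name upload_with_ttl and its arguments are hypothetical.

```shell
# Hypothetical wrapper: batch-upload a directory and set Cache-Control on
# every uploaded blob, so that the given max-age is used as the TTL
# instead of the CDN default.
upload_with_ttl() {
  account="$1"; container="$2"; source_dir="$3"; max_age_seconds="$4"
  az storage blob upload-batch \
    --account-name "$account" \
    --auth-mode login \
    --source "$source_dir" \
    --destination "$container" \
    --content-cache-control "public, max-age=${max_age_seconds}"
}
```

For example, `upload_with_ttl mystorageaccount demo dist 3600` would request a one-hour TTL for everything uploaded from dist.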

Custom Caching Rules

Resources that match specific file names (for example, manifest.json) are configured to bypass the cache. See terraform-publicdata/modules/common/main.tf.
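
To check how a given resource is actually being cached, it can help to inspect the response headers returned by the CDN endpoint. The sketch below is an illustration only: the helper name show_cache_headers is hypothetical, and the exact header names (X-Cache in particular) depend on the CDN provider, so treat them as an assumption.

```shell
# Hypothetical helper: send a HEAD request and print only the
# caching-related response headers for the given URL.
show_cache_headers() {
  curl -sSI "$1" | tr -d '\r' | grep -i -E '^(cache-control|age|x-cache):'
}
```

Call it with the full URL of a resource on the CDN endpoint, for example `show_cache_headers "https://<endpoint-hostname>/demo/my-app/manifest.json"`, where `<endpoint-hostname>` stands for your endpoint's hostname.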